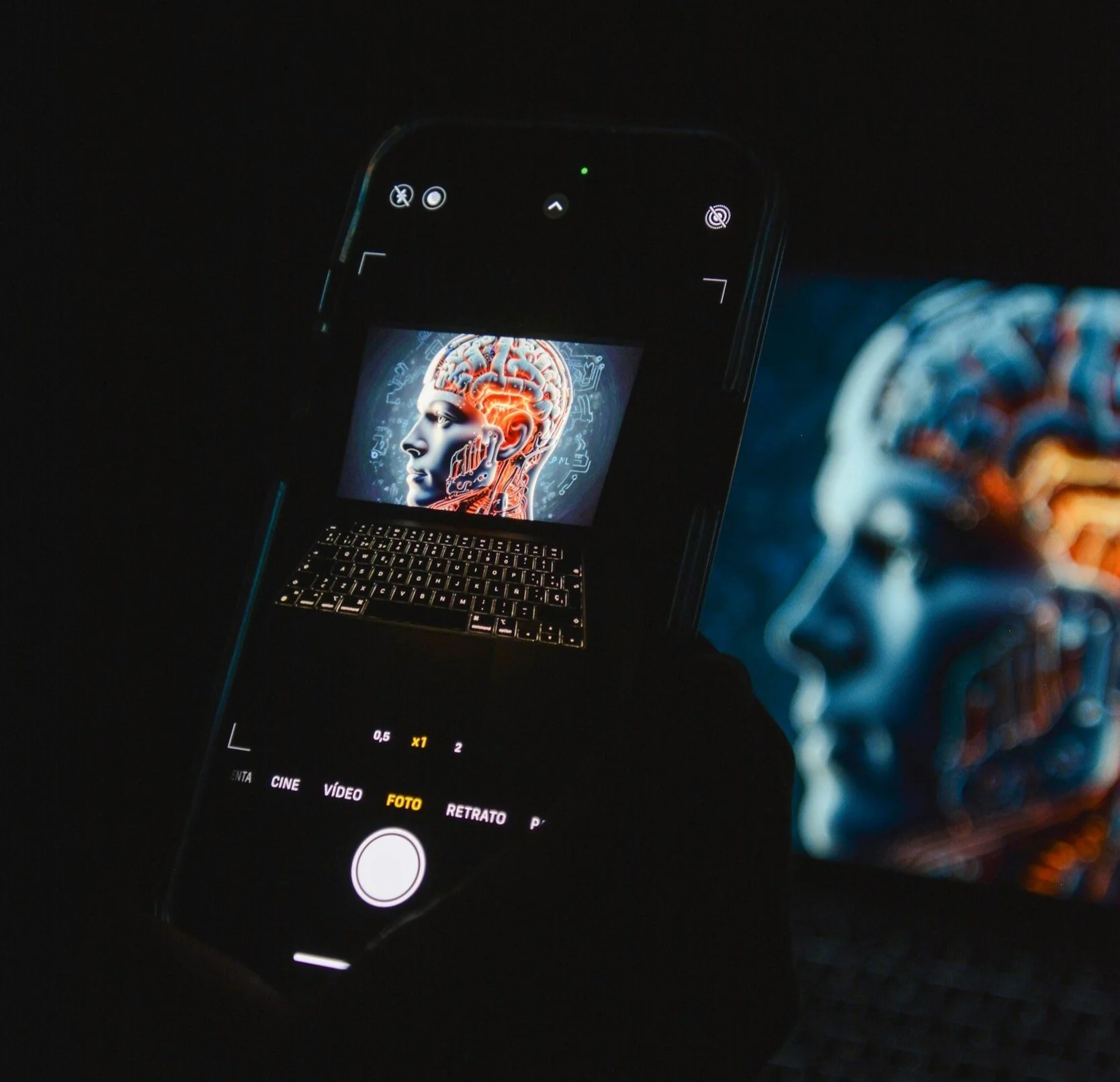

Rule of AI vs. Rule by AI: Will AI Serve Humanity or Enable State Control?

Image Credit: Aidin Geranrekab | Splash

As artificial intelligence continues to redefine industries, a critical debate is unfolding: Should AI be developed and deployed as a tool for the good of all people (Rule of AI), or should it be a mechanism for state control and societal regulation (Rule by AI)? This distinction mirrors the contrast between Rule of Law and Rule by Law, where one upholds fairness and accountability, while the other consolidates power.

AI is now deeply embedded in decision-making processes across governance, law enforcement, finance, and social systems. The way AI is integrated into these domains will determine whether it serves the people or the state—a fundamental question shaping the future of digital governance.

Defining Rule of AI vs. Rule by AI

Rule of AI refers to AI being a tool used by governments to benefit all people, much like the Rule of Law, which ensures fairness, justice, and transparency. AI is deployed to enhance public services, optimize decision-making, and uphold democratic values while maintaining ethical safeguards and human oversight.

Rule by AI means AI is a tool for states to control and govern their people, similar to Rule by Law, where laws are enforced primarily for political or authoritarian stability rather than for the good of society. In this model, AI dictates decisions with limited human oversight, prioritizing state control, surveillance, and behavioural regulation over individual freedoms.

The Ethical and Practical Dilemmas

Public Good vs. Political Control:

Rule of AI: Utilizing AI to enhance public welfare is a recognized approach. For instance, publicly developed AI systems can democratize technology, ensuring it serves the public interest.

Rule by AI: Conversely, AI can be employed for extensive state control. Some governments implement AI-driven monitoring systems, including real-time behavioural tracking and predictive analysis, exemplifying its use in enforcing authority rather than empowering individuals.

Accountability and Transparency:

Rule of AI: Ensuring AI decisions are subject to human oversight is crucial. The concept of "Public Constitutional AI" advocates for public engagement in designing AI systems, promoting transparency and accountability.

Rule by AI: AI systems can lead to opaque decision-making processes. The use of algorithms in governance, often termed "government by algorithm", can result in decisions that are difficult for citizens to understand or challenge.

Surveillance vs. Privacy:

Rule by AI: AI-powered surveillance tools, such as predictive policing and automated scoring systems (e.g., social credit systems), are increasingly used by governments, raising concerns about privacy and potential discrimination.

Rule of AI: Advocating for AI applications that enhance privacy rights is essential. The European Union's guidelines, for example, prohibit AI systems that manipulate users or exploit vulnerabilities, aiming to protect individual rights.

Job Displacement and Social Inequality:

Rule of AI: AI-driven automation can improve efficiency and, when governed in the public interest, broaden access to technology and its benefits.

Rule by AI: Without proper governance, AI can exacerbate social inequalities. The use of AI in surveillance and predictive policing can disproportionately affect marginalized communities, leading to further disenfranchisement.

Predicting and Managing Social Behaviour: A Double-Edged Sword

AI’s ability to predict and manage social behaviour is both a powerful tool and a potential threat. On one hand, predictive AI can be used to enhance public safety, optimize urban planning, and improve healthcare outcomes by anticipating societal needs. Governments and organizations can use AI to detect patterns in criminal activity, prevent the spread of diseases, and allocate resources more efficiently. On the other hand, when deployed without ethical constraints, predictive AI can lead to overreach, allowing states to manipulate public behaviour, enforce rigid social norms, and suppress dissent. The question remains: Should societies embrace AI’s predictive capabilities for governance, or does this pave the way for an era of algorithmic control? Striking a balance between beneficial social management and personal freedoms will be one of the defining challenges of AI governance.

Censorship and Political Control: A Necessary Measure or a Suppression of Free Speech?

Censoring politically sensitive material is one of the most controversial applications of AI governance. Proponents argue that content moderation and censorship can prevent the spread of misinformation, hate speech, and extremist propaganda, protecting social stability. However, when AI-driven censorship is used as a tool of political suppression, it raises significant ethical concerns. In nations where AI enforces state narratives, dissenting voices can be silenced, and public discourse can be manipulated. The challenge lies in distinguishing between legitimate content regulation that safeguards democratic values and authoritarian censorship that stifles free expression. As AI becomes more embedded in content moderation, societies must grapple with the question: Should AI determine what information is permissible, and if so, who decides its boundaries?

AI in Military and Cyber Warfare: A Necessary Defense or a Threat to Global Stability?

The integration of AI into military applications and cyber warfare presents one of the most complex ethical challenges of our time. On one hand, AI-powered defense systems, such as autonomous drones and cybersecurity protocols, can strengthen national security and minimize human casualties in warfare. AI-driven threat detection can preempt cyberattacks, protecting critical infrastructure from hostile actors. However, the increasing reliance on AI in military operations raises serious concerns. Autonomous weapon systems, if left unchecked, could act without human intervention, leading to unintended escalations and conflicts. Additionally, the use of AI for cyber warfare—such as AI-generated deepfakes, misinformation campaigns, and automated hacking—poses a significant threat to global stability. The world must confront the question: should AI be harnessed for defense, or does its role in warfare ultimately introduce more risks than protections? Striking a balance between national security and ethical considerations will be crucial in shaping the future of AI-driven military applications.

Ensuring AI Serves the Public Good

Governments must use AI to empower citizens, not control them. AI should be developed and implemented in ways that benefit public welfare, ensuring it does not become a tool for mass surveillance and social regulation.

Transparent AI policies are essential. Governments should mandate audits, ethical AI training, and accountability frameworks to prevent abuses.

Human oversight must remain central. AI should never replace democratic governance or human rights protections. Clear legal frameworks must define AI’s role in policymaking and enforcement.

Global cooperation is necessary. Countries must work together to ensure AI is aligned with fundamental human rights rather than becoming a means of oppression.

AI-driven decision-making must be fair and unbiased. Measures must be taken to eliminate algorithmic bias and discrimination, ensuring AI supports equality and inclusivity.

AI Governance as a Spectrum

The distinction between Rule of AI and Rule by AI is not always absolute. Rather, it exists on a spectrum where different nations find themselves at various points. Some regions prioritize ethical AI governance, ensuring transparency and fairness, while others implement AI as a means of control and regulation. Many developing nations find themselves in the middle, balancing AI’s economic potential with necessary safeguards. There is no universal model that fits all societies, as governance structures, political ideologies, and cultural perspectives influence how AI is deployed. But if we extend this idea further, should AI governance transcend national borders? Should AI be a global public good, accessible to all people regardless of nationality? The idea of a borderless world where AI benefits all humanity is a radical concept—but one that raises profound questions about digital equality and international cooperation.

Sources: Brookings.edu, Atlantic Council, arXiv, Wikipedia, Reuters, European Commission, AP News, Big Data China