OpenAI ChatGPT o3-mini – Data Cutoff Date in 2021, Three Years Before 4o?

Image Credit: Dima Solomin | Unsplash

On January 31, 2025, OpenAI introduced o3-mini, a compact, cost-efficient AI model optimized for reasoning in science, technology, engineering, and mathematics (STEM). The model is available now in both ChatGPT and the API, where it is set to replace o1-mini. Building on its predecessors, o3-mini aims to deliver higher accuracy and faster responses while keeping costs low.


Enhanced Features and Developer Tools

OpenAI o3-mini is the organization's first compact reasoning model to incorporate several highly requested features for developers:

Function Calling: Lets the model request calls to developer-defined functions and tools, so responses can draw on external data and actions.

Structured Outputs: Facilitates the generation of responses in predefined formats, enhancing consistency and reliability.

Developer Messages: Lets developers pass instructions to the model through a dedicated message role, filling the part that system messages play for other models.

These features make o3-mini ready for immediate use in production applications. Like its predecessor o1-mini, o3-mini also supports streaming, so responses can be delivered incrementally as they are generated rather than only after completion.
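As a rough illustration of how these features might appear together in a request, the sketch below assembles a Chat Completions request body as a plain Python dictionary without sending it. The top-level field names (`messages` with a `developer` role, `tools`, `response_format`, `stream`) follow OpenAI's published API; the `get_weather` tool and the `weather_report` schema are hypothetical and exist only for this example.

```python
import json


def build_request(question: str) -> dict:
    """Assemble (but do not send) a Chat Completions request body for o3-mini."""
    return {
        "model": "o3-mini",
        "messages": [
            # Developer message: instructions supplied by the application developer.
            {"role": "developer", "content": "Answer concisely and use metric units."},
            {"role": "user", "content": question},
        ],
        # Function calling: describe a function the model may ask the app to run.
        # `get_weather` is a hypothetical tool invented for this sketch.
        "tools": [
            {
                "type": "function",
                "function": {
                    "name": "get_weather",
                    "description": "Look up the current weather for a city.",
                    "parameters": {
                        "type": "object",
                        "properties": {"city": {"type": "string"}},
                        "required": ["city"],
                        "additionalProperties": False,
                    },
                },
            }
        ],
        # Structured outputs: constrain the final answer to a JSON schema.
        # `weather_report` is likewise a hypothetical schema.
        "response_format": {
            "type": "json_schema",
            "json_schema": {
                "name": "weather_report",
                "strict": True,
                "schema": {
                    "type": "object",
                    "properties": {
                        "city": {"type": "string"},
                        "temperature_c": {"type": "number"},
                    },
                    "required": ["city", "temperature_c"],
                    "additionalProperties": False,
                },
            },
        },
        # Streaming: ask for the response to be delivered incrementally.
        "stream": True,
    }


request = build_request("What is the weather in Oslo?")
print(json.dumps(request, indent=2))
```

Building the body as data keeps the example independent of any particular client library; in practice the same fields would be passed to whichever HTTP client or SDK the application uses.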


Adjustable Reasoning Effort

A notable addition in o3-mini is adjustable reasoning effort, with three levels: low, medium, and high. This lets the model spend more or less computation depending on the complexity of the task at hand:

Low Effort: Prioritizes speed, suitable for straightforward tasks where quick responses are essential.

Medium Effort: Balances speed and accuracy, ideal for general-purpose applications.

High Effort: Allocates more computational resources for complex challenges, enhancing the model's problem-solving capabilities.

This adaptability allows developers to optimize the model's performance according to specific use cases and requirements.
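A minimal sketch of how a developer might select an effort level per request is shown below. It assumes the Chat Completions `reasoning_effort` field with the three values named above; the helper `build_reasoning_request` and its default of `"medium"` are choices made for this example, and the request is only constructed, not sent.

```python
VALID_EFFORTS = ("low", "medium", "high")


def build_reasoning_request(prompt: str, effort: str = "medium") -> dict:
    """Build a request body for o3-mini with an explicit reasoning effort."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {VALID_EFFORTS}, got {effort!r}")
    return {
        "model": "o3-mini",
        "reasoning_effort": effort,
        "messages": [{"role": "user", "content": prompt}],
    }


# A quick lookup can use low effort; a hard proof can justify high effort.
quick = build_reasoning_request("Convert 3 km to miles.", effort="low")
hard = build_reasoning_request("Prove that sqrt(2) is irrational.", effort="high")
print(quick["reasoning_effort"], hard["reasoning_effort"])
```

Validating the value locally catches typos before a request is made, which is cheaper than waiting for the API to reject it.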


Availability and Access

Starting from January 31, o3-mini is being rolled out to select developers in API usage tiers 3-5 through the Chat Completions API, Assistants API, and Batch API. ChatGPT Plus, Team, and Pro users can also access o3-mini immediately, with Enterprise access scheduled for February. Notably, o3-mini will replace o1-mini in the model selection interface, offering higher rate limits and lower latency. As part of this upgrade, OpenAI is tripling the rate limit for Plus and Team users from 50 to 150 messages per day. Pro users will have unlimited access to both o3-mini and the more advanced o3-mini-high variant.

For the first time, free plan users can experience o3-mini by selecting 'Reason' in the message composer or by regenerating a response, marking a significant step in making advanced AI tools more accessible to a broader audience.


Performance and Efficiency

OpenAI reports that o3-mini, with medium reasoning effort, matches the performance of the earlier o1 model in areas such as mathematics, coding, and science, while delivering faster responses. Evaluations by expert testers indicate that o3-mini produces more accurate and clearer answers, with stronger reasoning abilities, compared to o1-mini. Testers preferred o3-mini's responses over o1-mini 56% of the time and observed a 39% reduction in major errors on challenging real-world questions.

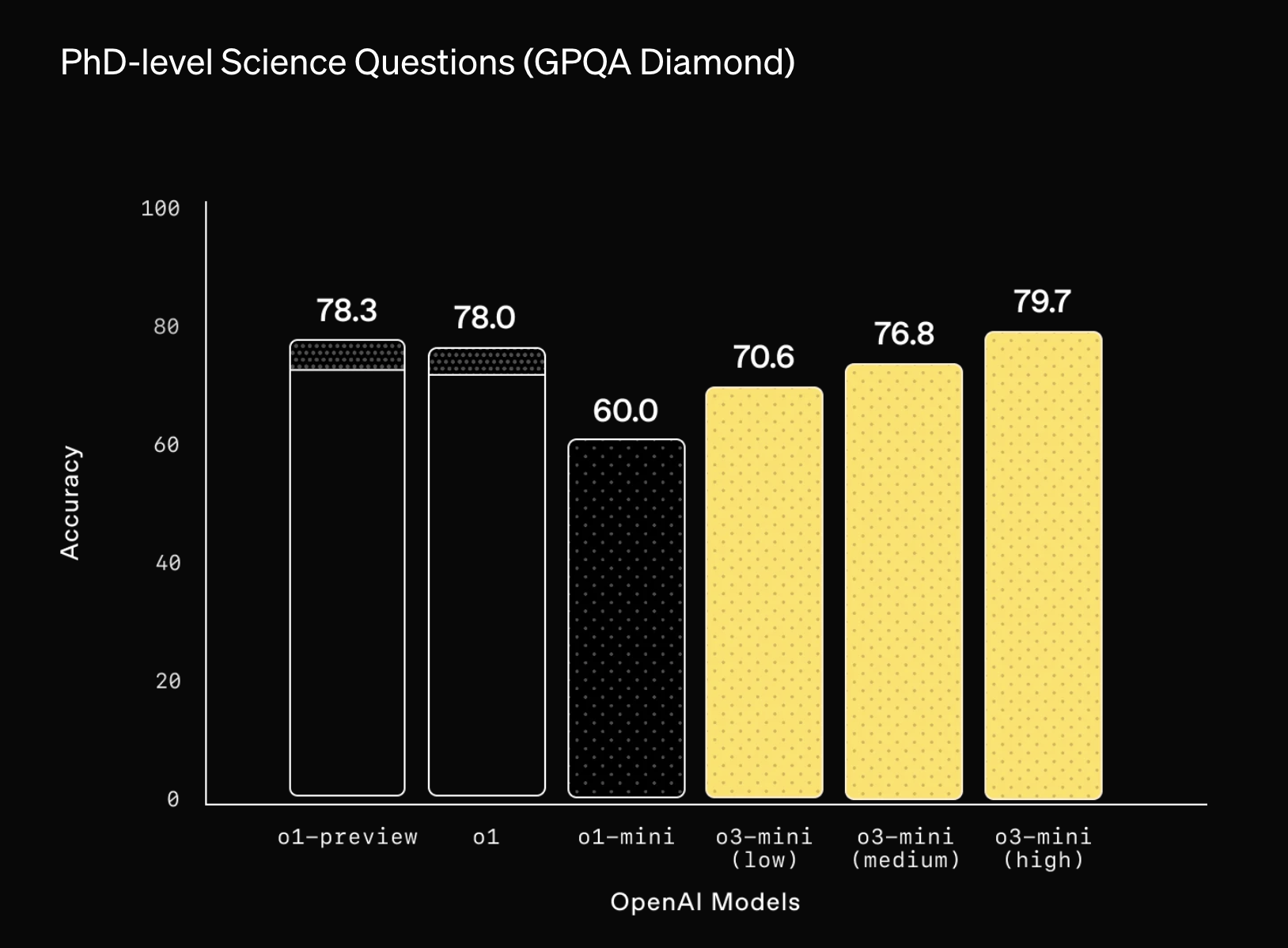

Benchmark tests further demonstrate o3-mini's capabilities:

Competition Math (AIME 2024): With low reasoning effort, o3-mini achieves comparable performance to o1-mini. At medium effort, it matches o1's performance, and with high effort, it surpasses both predecessors.

PhD-level Science: On advanced biology, chemistry, and physics questions, o3-mini with low effort outperforms o1-mini. With high effort, it achieves performance comparable to o1.

Research-level Mathematics: With high reasoning effort, o3-mini solves over 32% of problems on the FrontierMath benchmark on the first attempt, including more than 28% of the most challenging problems.

Competition Coding: On Codeforces competitive programming tasks, o3-mini achieves progressively higher scores with increased reasoning effort, matching o1's performance at medium effort.

Software Engineering: o3-mini with high reasoning effort is OpenAI's highest-performing released model on the SWE-bench Verified benchmark.

In terms of speed, o3-mini delivers responses 24% faster than o1-mini, with an average response time of 7.7 seconds compared to 10.16 seconds.
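The 24% figure can be checked from the two reported averages. The short calculation below treats it as the relative reduction in average response time, which is an interpretation on our part; the variable names are ours.

```python
o1_mini_seconds = 10.16  # reported average response time for o1-mini
o3_mini_seconds = 7.7    # reported average response time for o3-mini

# Relative reduction in response time compared with o1-mini.
reduction = (o1_mini_seconds - o3_mini_seconds) / o1_mini_seconds
print(f"{reduction:.1%}")  # 24.2%
```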


Source: OpenAI

The Data Cutoff Controversy: o3-mini vs. ChatGPT-4o

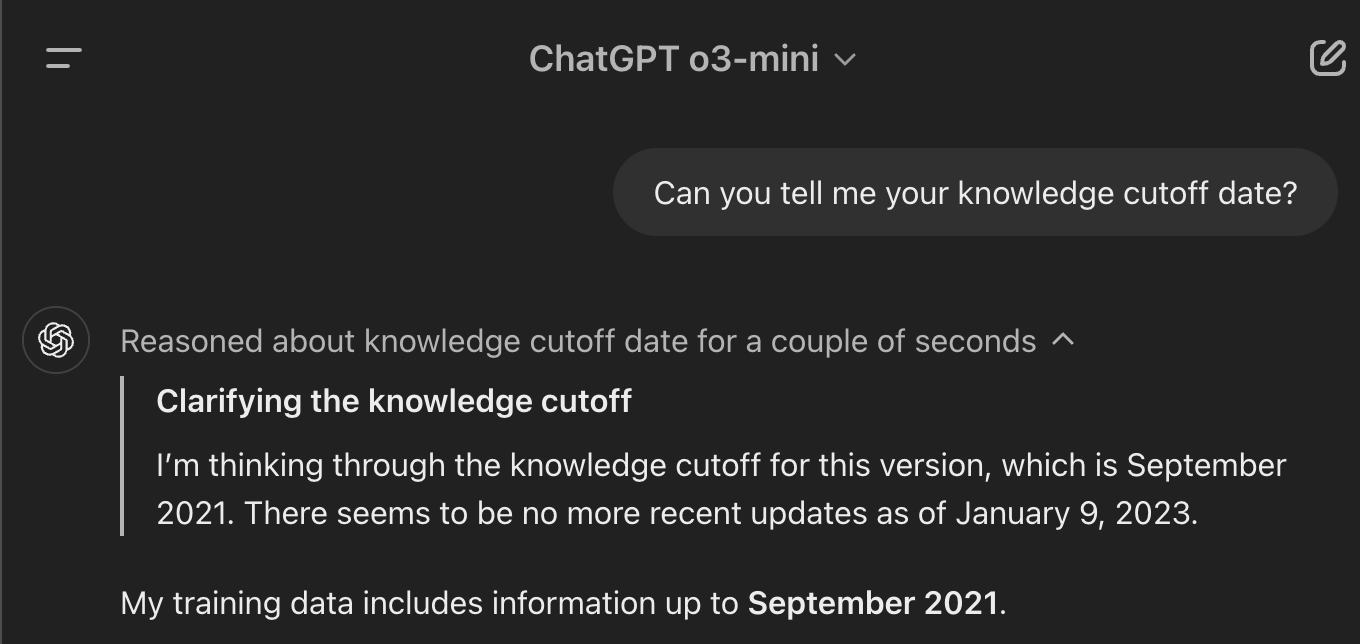

One surprising aspect of o3-mini is its data cutoff date. ChatGPT-4o was trained on data up until June 2024. When we asked o3-mini directly about its own knowledge cutoff on February 3, however, it responded with September 2021. This immediately raised concerns about its ability to handle recent information and real-world events.

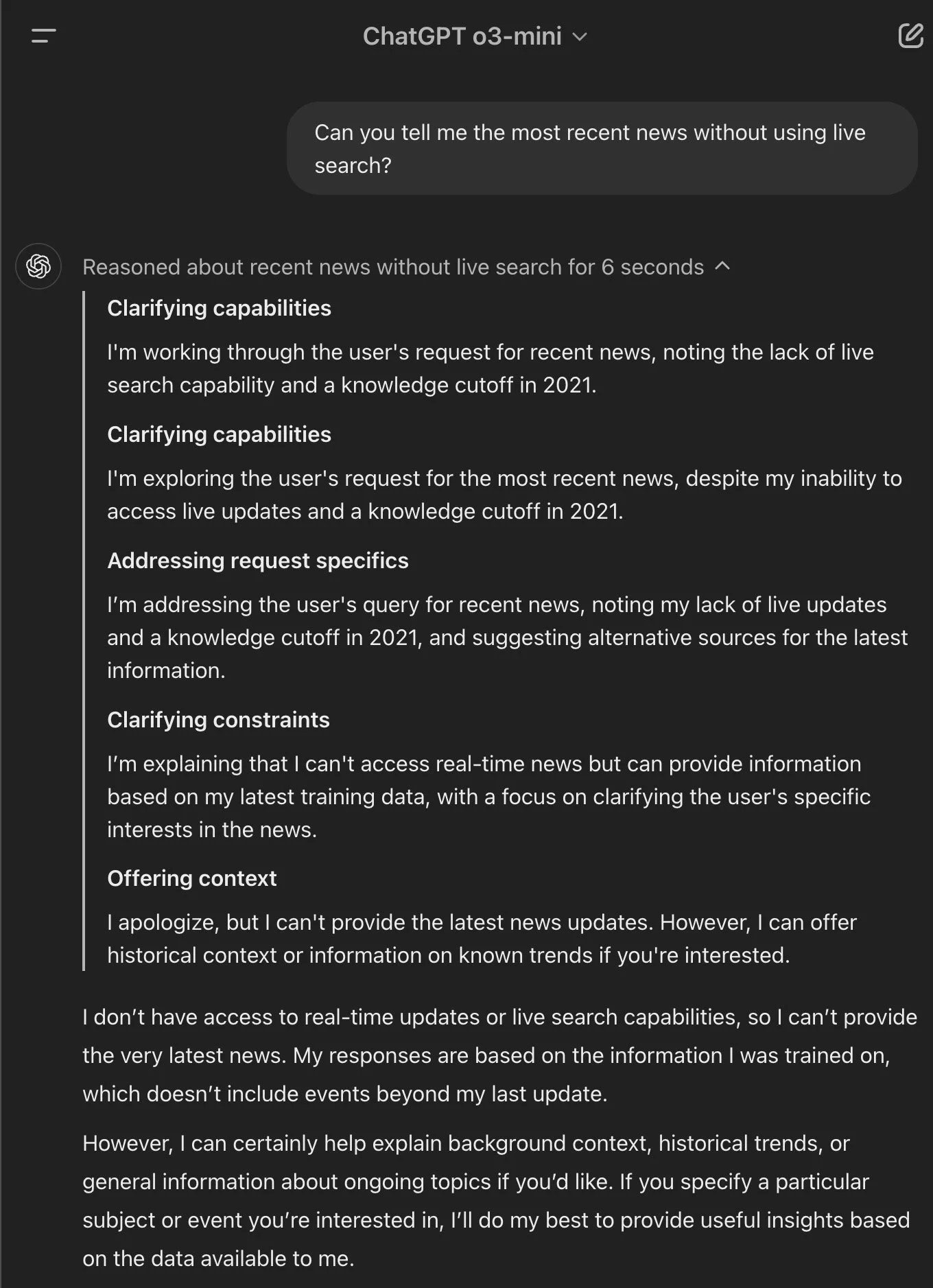

ChatGPT o3-mini's response to the question: ‘Can you tell me the most recent news without using live search?’

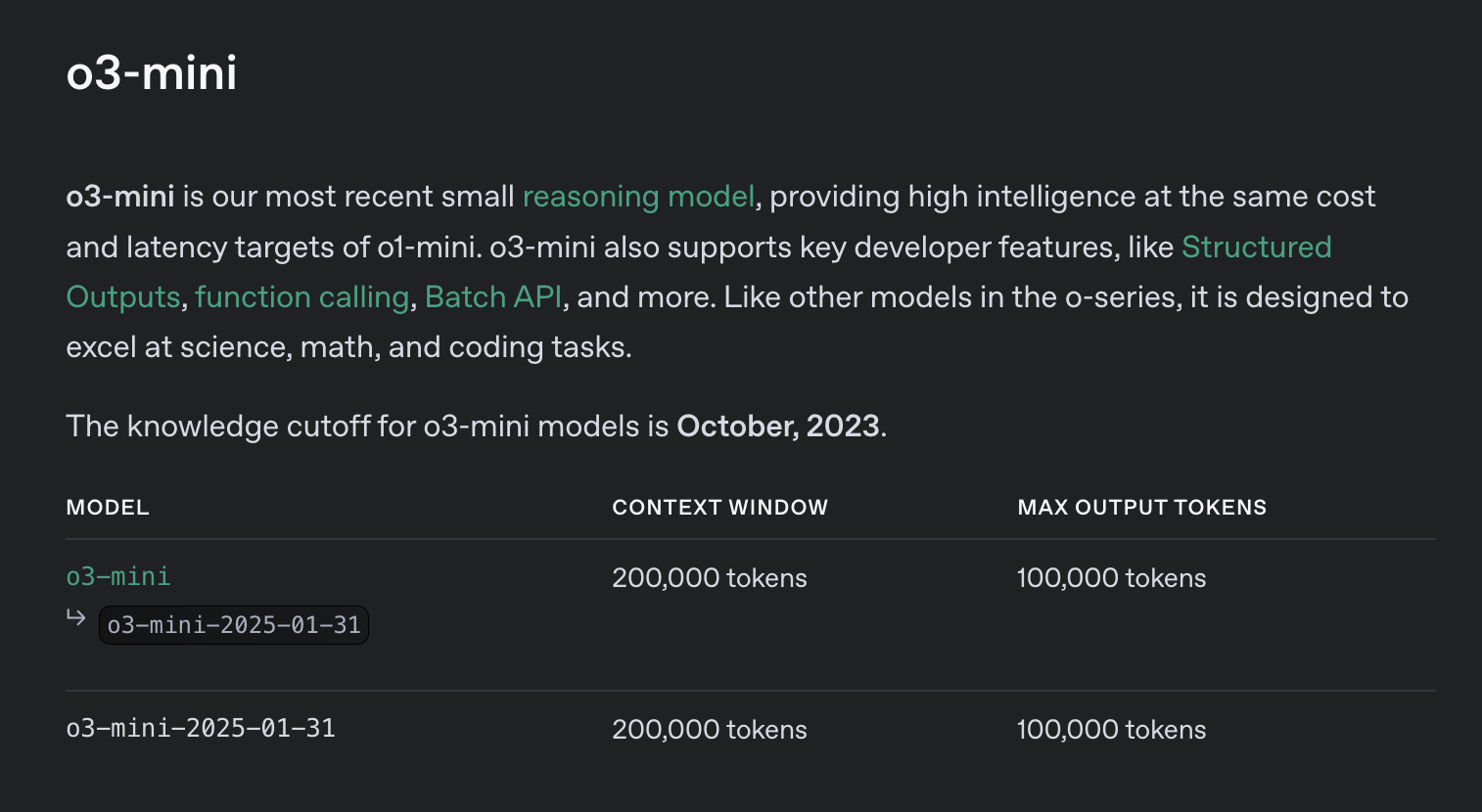

However, OpenAI’s official documentation contradicts this response, stating that the actual knowledge cutoff for o3-mini is October 2023.

While October 2023 is still about eight months behind ChatGPT-4o’s June 2024 cutoff, it is significantly more recent than September 2021.
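To put the three dates side by side, the gaps can be counted in whole months. The helper `months_between` below is a small illustrative function of our own, and each cutoff is represented by the first day of its month.

```python
from datetime import date


def months_between(earlier: date, later: date) -> int:
    """Count whole calendar months from one month to another."""
    return (later.year - earlier.year) * 12 + (later.month - earlier.month)


self_reported = date(2021, 9, 1)   # cutoff o3-mini gave when asked
documented = date(2023, 10, 1)     # cutoff in OpenAI's documentation
chatgpt_4o = date(2024, 6, 1)      # ChatGPT-4o cutoff cited above

print(months_between(self_reported, documented))  # 25
print(months_between(documented, chatgpt_4o))     # 8
print(months_between(self_reported, chatgpt_4o))  # 33
```

The self-reported date trails ChatGPT-4o by 33 months, close to the three years in the headline, while the documented date trails it by only 8 months.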

Information captured from the OpenAI Platform.

This discrepancy suggests that either o3-mini is not fully aware of its own training data or there may be inconsistencies in how OpenAI implements and communicates model cutoffs.

ChatGPT o3-mini's response to the question: ‘Can you tell me your knowledge cutoff date?’

Source: OpenAI